Tensor Processing Units (TPUs)

- 07 Dec 2025

In News:

Recent reports indicate that Meta is in advanced discussions with Google to use Google's Tensor Processing Units (TPUs), a sign of growing competition in the global AI hardware ecosystem.

What is a TPU?

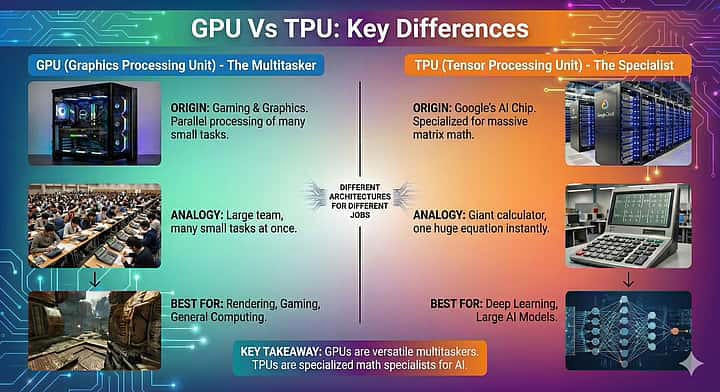

A Tensor Processing Unit (TPU) is a custom-designed semiconductor chip developed by Google to accelerate Artificial Intelligence (AI) and Machine Learning (ML) workloads. Central Processing Units (CPUs) handle general computing tasks, and Graphics Processing Units (GPUs) are versatile processors widely used for both graphics and AI. TPUs, by contrast, are application-specific integrated circuits (ASICs) designed around the core operations of deep learning.

Google began developing TPUs in the early 2010s to meet the growing computational demands of AI applications such as Google Search, Translate, Photos, and voice recognition. The first TPU was deployed in Google's data centres in 2015 and publicly announced in 2016; several generations have since been used internally and offered to customers through Google Cloud.

How TPUs Work

AI models depend heavily on tensor operations—mathematical calculations involving multi-dimensional arrays of numbers. Deep neural networks process data through repeated matrix multiplications and tensor algebra, which are computationally intensive.
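To make "repeated matrix multiplications" concrete, here is a minimal pure-Python sketch of one fully connected neural-network layer, y = relu(xW + b). The function names (`matmul`, `dense_layer`, `mac_count`) and the toy sizes are illustrative assumptions, not part of any real framework; production systems run the same arithmetic on optimised hardware.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Naive matrix product: (n x k) @ (k x m) -> (n x m)."""
    n, k, m = len(a), len(b), len(b[0])
    assert all(len(row) == k for row in a), "inner dimensions must match"
    out = [[0.0] * m for _ in range(n)]
    for i in range(n):
        for j in range(m):
            acc = 0.0
            for p in range(k):
                acc += a[i][p] * b[p][j]  # one multiply-accumulate (MAC)
            out[i][j] = acc
    return out


def dense_layer(x: Matrix, w: Matrix, bias: List[float]) -> Matrix:
    """One fully connected layer with ReLU: relu(x @ w + bias)."""
    z = matmul(x, w)
    return [[max(0.0, v + b) for v, b in zip(row, bias)] for row in z]


def mac_count(batch: int, n_in: int, n_out: int) -> int:
    """Multiply-accumulates needed by one dense layer's matrix product."""
    return batch * n_in * n_out


if __name__ == "__main__":
    x = [[1.0, 2.0], [3.0, 4.0]]               # batch of 2 inputs, 2 features each
    w = [[1.0, -1.0, 0.5], [0.5, 1.0, -1.0]]   # maps 2 features -> 3 outputs
    bias = [0.0, 0.0, 1.0]
    print(dense_layer(x, w, bias))  # [[2.0, 1.0, 0.0], [5.0, 1.0, 0.0]]
    print(mac_count(batch=32, n_in=4096, n_out=4096))  # 536870912
```

Even a single 4096-to-4096 layer applied to a batch of 32 inputs needs 32 × 4096 × 4096 = 536,870,912 multiply-accumulates, and a deep network repeats this across many layers, which is why hardware dedicated to matrix arithmetic pays off.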

TPUs are optimised for these operations through:

- Massive Parallelism: Thousands of multiply-accumulate units work simultaneously, so many parts of a matrix multiplication are computed at once.

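- Specialised Architecture: The core matrix unit is a systolic array, a grid of simple arithmetic cells that pass intermediate values directly to their neighbours, avoiding much of the memory traffic and control overhead of general-purpose processors.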

- Energy Efficiency: On the workloads they are built for, TPUs often deliver more performance per watt than general-purpose GPUs.

This makes them particularly efficient for both training and inference of large-scale AI models.
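The parallelism and systolic-array points above can be illustrated with a toy simulation. This is a simplified, output-stationary sketch under stated assumptions: each processing element (PE) keeps one output value, values of A flow right and values of B flow down one cell per clock cycle, and the name `systolic_matmul` is hypothetical. It is not a model of any specific TPU generation (the first TPU, for example, kept weights stationary in the array instead).

```python
from typing import List, Optional, Tuple

Matrix = List[List[float]]
Grid = List[List[Optional[float]]]


def systolic_matmul(a: Matrix, b: Matrix) -> Tuple[Matrix, int]:
    """Simulate an n x m output-stationary systolic array computing a @ b.

    PE(i, j) accumulates out[i][j]. Row i of `a` enters at the left edge
    delayed by i cycles; column j of `b` enters at the top delayed by j
    cycles, so a[i][p] and b[p][j] meet in PE(i, j) at cycle p + i + j.
    Returns the product and the number of clock cycles simulated.
    """
    n, k, m = len(a), len(b), len(b[0])
    assert all(len(row) == k for row in a), "inner dimensions must match"
    out = [[0.0] * m for _ in range(n)]
    a_reg: Grid = [[None] * m for _ in range(n)]  # value moving right
    b_reg: Grid = [[None] * m for _ in range(n)]  # value moving down
    cycles = n + m + k - 2  # last pair meets in PE(n-1, m-1) at cycle n+m+k-3

    for t in range(cycles):
        new_a: Grid = [[None] * m for _ in range(n)]
        new_b: Grid = [[None] * m for _ in range(n)]
        for i in range(n):
            for j in range(m):
                # Each register latches its left/upper neighbour's value from
                # the previous cycle; edge cells read the skewed inputs.
                if j == 0:
                    p = t - i
                    new_a[i][j] = a[i][p] if 0 <= p < k else None
                else:
                    new_a[i][j] = a_reg[i][j - 1]
                if i == 0:
                    p = t - j
                    new_b[i][j] = b[p][j] if 0 <= p < k else None
                else:
                    new_b[i][j] = b_reg[i - 1][j]
        a_reg, b_reg = new_a, new_b
        # All PEs fire in the same cycle: one multiply-accumulate each.
        for i in range(n):
            for j in range(m):
                x, y = a_reg[i][j], b_reg[i][j]
                if x is not None and y is not None:
                    out[i][j] += x * y
    return out, cycles


if __name__ == "__main__":
    a = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
    b = [[7.0, 8.0], [9.0, 10.0], [11.0, 12.0]]
    product, cycles = systolic_matmul(a, b)
    print(product)  # [[58.0, 64.0], [139.0, 154.0]]
    print(cycles)   # 5
```

In real hardware every cell fires in the same clock cycle, so this (2 × 3) by (3 × 2) product finishes in 5 cycles on a 2 × 2 grid rather than the 12 sequential multiply-accumulates a single arithmetic unit would need. Operands also hop between neighbouring cells instead of being re-read from memory for every operation.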

TPUs vs CPUs vs GPUs

| Feature | CPU | GPU | TPU |
|---|---|---|---|
| Primary Use | General-purpose computing | Graphics & parallel tasks | AI/ML acceleration |
| Flexibility | Very high | High | Limited to AI tasks |
| Optimised for | Sequential tasks | Parallel processing | Tensor/matrix operations |
| Energy Efficiency for AI | Low | Moderate | High |

Strategic Importance

The reported interest of major AI firms in TPUs reflects a shift in the AI hardware landscape. For years, NVIDIA GPUs have dominated AI training and deployment thanks to their performance and mature software ecosystem (notably CUDA). However, large technology companies are increasingly investing in custom AI chips to cut costs, improve efficiency, and lessen their dependence on external suppliers.

Google already offers TPU access through Google Cloud, allowing external firms to run AI workloads on TPU clusters. This signals the rise of merchant AI silicon, where companies design chips not only for internal use but also for commercial deployment by other firms.